Meme Search Engine

Do you have a large folder of memes you want to search semantically? Do you have a Linux server with an Nvidia GPU? You do; this is now mandatory.

Features

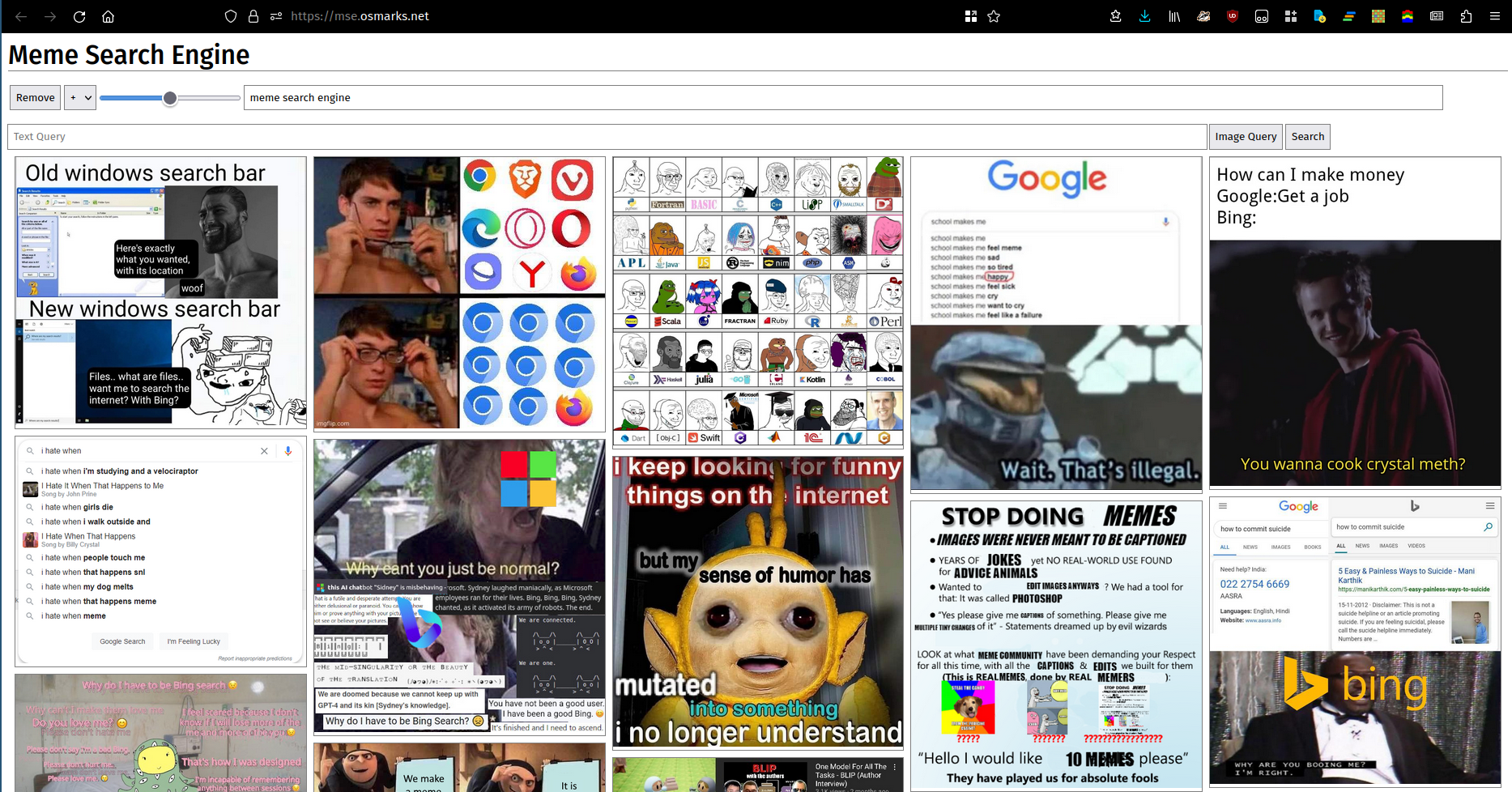

They say a picture is worth a thousand words. Unfortunately, many (most?) sets of words cannot be adequately described by pictures. Regardless, here is a picture. You can use a running instance here.

- Infinite-scroll masonry UI for dense meme viewing.

- Online reindexing (a good reason to use it over clip-retrieval) - reload memes without a slow expensive rebuild step.

- Complex query support - query using text and images, including multiple terms at once, with weighting (including negative).

- Reasonably fast.
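Here is a rough sketch of how a weighted multi-term query can be combined. It assumes each term (text or image) has already been turned into a CLIP-style embedding. Each embedding is normalized, the embeddings are summed with their weights (a negative weight pushes results away from that term), the sum is renormalized, and memes are ranked by cosine similarity. The function names and toy 3-dimensional vectors are hypothetical, and this is not necessarily how the engine itself does it.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def combine_query(terms):
    """Combine (embedding, weight) pairs into one unit-length query vector.

    Negative weights subtract a term, steering results away from it.
    """
    dim = len(terms[0][0])
    total = [0.0] * dim
    for embedding, weight in terms:
        for i, x in enumerate(normalize(embedding)):
            total[i] += weight * x
    return normalize(total)


def rank(query, items):
    """Return item names sorted by descending cosine similarity to the query."""
    scores = {
        name: sum(q * x for q, x in zip(query, normalize(emb)))
        for name, emb in items.items()
    }
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical toy embeddings: a "cat" text term, minus half of a "dog" image term.
text_cat = [1.0, 0.0, 0.0]
image_dog = [0.0, 1.0, 0.0]
query = combine_query([(text_cat, 1.0), (image_dog, -0.5)])
memes = {
    "cat.png": [0.9, 0.1, 0.0],
    "dog.png": [0.1, 0.9, 0.0],
    "other.png": [0.0, 0.0, 1.0],
}
print(rank(query, memes))  # ['cat.png', 'other.png', 'dog.png']
```

The negative weight is what pushes `dog.png` below the unrelated `other.png`.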

Setup

New: somewhat tested Dockerized setup under /docker/. Note that you need a model like https://huggingface.co/timm/ViT-SO400M-14-SigLIP-384 (for timm), not one packaged for other libraries.

This is untested. It might work. The new Rust version simplifies some steps (it integrates its own thumbnailing).

- Serve your meme library from a static webserver.
    - I use nginx. If you're in a hurry, you can use `python -m http.server`.

- Install Python dependencies with `pip` from `requirements.txt` (the versions probably shouldn't need to match exactly if you need to change them; I just put in what I currently have installed).
    - Older versions needed a patched version of `transformers` for SigLIP support and a converted SigLIP model (https://huggingface.co/gollark/siglip-so400m-14-384). OpenCLIP now supports SigLIP, so I use that instead, and you can use any model which OpenCLIP supports.

- Run `thumbnailer.py` (periodically, at the same time as index reloads, ideally).

- Run `clip_server.py` (as a background service).
    - It is configured with a JSON file given to it as its first argument. An example is in `clip_server_config.json`.
        - `device` should probably be `cuda` or `cpu`. The model will run on this device.
        - `model` is the OpenCLIP model to use.
        - `model_name` is the name of the model for metrics purposes.
        - `max_batch_size` controls the maximum allowed batch size. Higher values generally result in somewhat better performance (though the bottleneck in most cases is elsewhere right now) at the cost of higher VRAM use.
        - `port` is the port to run the HTTP server on.

- Build and run `meme-search-engine` (Rust) (also as a background service).
    - This needs to be exposed somewhere the frontend can reach it. Configure your reverse proxy appropriately.
    - It has a JSON config file as well.
        - `clip_server` is the full URL of the CLIP backend server (`clip_server.py`).
        - `db_path` is the path for the SQLite database of images and embedding vectors.
        - `files` is where meme files will be read from. Subdirectories are indexed.
        - `port` is the port to serve HTTP on.
        - If you are deploying to the public, set `enable_thumbs` to `true` to serve compressed images.

- Build clipfront2 and host it on your favourite static webserver.
    - Run `npm install`, then `node src/build.js`.
    - You will need to rebuild it whenever you edit `frontend_config.json`.
        - `image_path` is the base URL of your meme webserver (with trailing slash).
        - `backend_url` is the URL `mse.py` is exposed on (trailing slash probably optional).

- If you want, configure Prometheus to monitor `clip_server.py`.
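The following small Python sketch makes the three configuration files above concrete. It builds them with the documented keys and checks that they agree with each other. Every value is a hypothetical placeholder (ports, paths, and especially the `model` string, since the exact format `clip_server.py` expects is an assumption here). The file name `backend_config.json` for the `meme-search-engine` config is also made up, so check the example configs in the repository.

```python
import json
from pathlib import Path
from urllib.parse import urlparse

# All values below are hypothetical placeholders.
clip_server_config = {
    "device": "cuda",  # or "cpu"; the model runs on this device
    "model": "hf-hub:timm/ViT-SO400M-14-SigLIP-384",  # assumed OpenCLIP model identifier format
    "model_name": "siglip-so400m-384",  # only used to label metrics
    "max_batch_size": 128,  # higher: somewhat faster, more VRAM
    "port": 8001,
}

backend_config = {  # for meme-search-engine
    "clip_server": "http://localhost:8001",  # full URL of clip_server.py
    "db_path": "/srv/memes/index.sqlite3",
    "files": "/srv/memes/files",  # subdirectories are indexed too
    "port": 8002,
    "enable_thumbs": False,  # set to True when deploying publicly
}

frontend_config = {  # for clipfront2; rebuild after editing
    "image_path": "http://localhost:8000/",  # static meme webserver, trailing slash
    "backend_url": "http://localhost:8002/",
}


def validate(clip, backend, frontend):
    """Return a list of human-readable problems (empty if none were found)."""
    problems = []
    if clip["device"] not in ("cuda", "cpu"):
        problems.append("device should probably be 'cuda' or 'cpu'")
    if not isinstance(clip["max_batch_size"], int) or clip["max_batch_size"] < 1:
        problems.append("max_batch_size should be a positive integer")
    if urlparse(backend["clip_server"]).port != clip["port"]:
        problems.append("clip_server URL does not match clip_server.py's port")
    if not frontend["image_path"].endswith("/"):
        problems.append("image_path needs a trailing slash")
    return problems


def write_configs(directory):
    """Write the three configs as pretty-printed JSON into `directory`."""
    out = Path(directory)
    for name, cfg in [
        ("clip_server_config.json", clip_server_config),
        ("backend_config.json", backend_config),  # hypothetical file name
        ("frontend_config.json", frontend_config),
    ]:
        (out / name).write_text(json.dumps(cfg, indent=4))
```

Call `validate(clip_server_config, backend_config, frontend_config)`, fix anything it reports, and then call `write_configs(".")`. If `backend_url` goes through a reverse proxy, its port will not necessarily match `backend_config["port"]`, so the sketch does not check that pair.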

MemeThresher

Note: this has now been superseded by various changes made for the scaled run. Use commit 512b776 for the old version.

See here for information on MemeThresher, the new automatic meme acquisition/rating system (under meme-rater). Deploying it yourself is anticipated to be somewhat tricky but should be roughly doable:

1. Edit `crawler.py` with your own source and run it to collect an initial dataset.

2. Run `mse.py` with a config file like the provided one to index it.

3. Use `rater_server.py` to collect an initial dataset of pairs.

4. Copy to a server with a GPU and use `train.py` to train a model. You might need to adjust hyperparameters since I have no idea which ones are good.

5. Use `active_learning.py` on the best available checkpoint to get new pairs to rate.

6. Use `copy_into_queue.py` to copy the new pairs into the `rater_server.py` queue.

7. Rate the resulting pairs.

8. Repeat 4 through 7 until you feel good enough about your model.

9. Deploy `library_processing_server.py` and schedule `meme_pipeline.py` to run periodically.
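The rating steps collect pairwise comparisons rather than absolute scores. One standard way to learn a scorer from such pairs is a Bradley-Terry-style objective. It models the probability that meme A beats meme B as sigmoid(score(A) - score(B)) and maximizes the log-likelihood of the observed outcomes. The sketch below does this with a linear scorer over embeddings in pure Python. It illustrates that assumed technique and is not a reproduction of `train.py`. The toy data and function names are hypothetical.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def score(weights, embedding):
    """Linear quality score: dot product of weights and embedding."""
    return sum(w * x for w, x in zip(weights, embedding))


def train_pairwise(pairs, dim, lr=0.5, epochs=200):
    """Fit weights so that score(winner) > score(loser) for each rated pair.

    pairs: list of (winner_embedding, loser_embedding).
    Uses stochastic gradient ascent on log sigmoid(score(winner) - score(loser)).
    """
    weights = [0.0] * dim
    for _ in range(epochs):
        for winner, loser in pairs:
            diff = [a - b for a, b in zip(winner, loser)]
            # By linearity, score(diff) == score(winner) - score(loser).
            p = sigmoid(score(weights, diff))  # P(winner beats loser)
            # d/dw log sigmoid(w . diff) = (1 - p) * diff
            weights = [w + lr * (1 - p) * d for w, d in zip(weights, diff)]
    return weights


# Hypothetical toy data: the first dimension loosely means "funny".
pairs = [
    ([1.0, 0.0], [0.0, 1.0]),
    ([0.8, 0.2], [0.1, 0.9]),
    ([0.9, 0.5], [0.2, 0.4]),
]
weights = train_pairwise(pairs, dim=2)
print(score(weights, [1.0, 0.0]) > score(weights, [0.0, 1.0]))  # True
```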

Scaling

This repository contains both the small-scale personal meme search system, which uses an in-memory FAISS index that scales to perhaps ~1e5 items (more with some minor tweaks), and the larger-scale version based on DiskANN, which should work up to ~1e9 items and has been tested at hundred-million-item scale. The larger version (accessible here) is trickier to use. You will have to, roughly:

- Download a Reddit scrape dataset. You can use other things, obviously, but then you would have to edit the code. Contact me if you're interested.

- Build and run `reddit-dump` to do the download. It will emit zstandard-compressed msgpack files of records containing metadata and embeddings. This may take some time. Configuration is done by editing `reddit_dump.rs`. I don't currently have a way to run the downloads across multiple machines, but you can put the CLIP backend behind a load balancer.
    - Use `genseahash.py` to generate hashes of files to discard.

- Build and run `dump-processor -s` on the resulting zstandard files to generate a sample of ~1 million embeddings to train the OPQ codec and clusters.

- Use `dump-processor -t` to generate a sample of titles and `generate_queries_bin.py` to generate text embeddings.

- Use `kmeans.py` with the appropriate `n_clusters` to generate centroids to cluster your dataset such that each of the clusters fits in your available RAM (with some spare space). Note that each vector goes to two clusters.

- Use `aopq_train.py` to train the OPQ codec on your embeddings sample and queries. A GPU is recommended, as I brute-forced some of the problems involved with compute time.

- Use `dump-processor -C [centroids] -S [shards folder]` to split your dataset into shards.
    - The shards will be about twice the size of the dump files.
    - You may want to do filtering at this point. I don't have a provision to do proper deduplication or aesthetic filtering at this stage, but you can use `-E` to filter by similarity to embeddings. Make sure to use the same filtering settings here as you do later, so that the IDs match.

- Build `generate-index-shard` and run it on each shard with the queries file from earlier. This will generate small files containing the graph structure.

- Use `dump-processor -s [some small value] -j` to generate a sample of embeddings and metadata to train a quality model.

- Use `meme-rater/load_from_json.py` to initialize the rater database from the resulting JSON.

- Use `meme-rater/rater_server.py` to do data labelling. Its frontend has keyboard controls (QWERT for usefulness, ASDFG for memeness, ZXCVB for aesthetics). You will have to edit this file if you want to rate on other dimensions.

- Use `meme-rater/train.py` to configure and train a quality model. If you edit the model config here, edit the other scripts too, since I never centralized this.
    - Use one of the `meme-rater/active_learning_*.py` scripts to select high-variance/high-gradient/high-quality samples to label and `copy_into_queue.py` to copy them into the queue.
    - Do this repeatedly until you like the model.
    - You can also use a pretrained checkpoint from here.

- Use `meme-rater/ensemble_to_wide_model.py` to export the quality model so that it is usable by the Rust code.

- Use `meme-rater/compute_cdf.py` to compute the distribution of quality for packing by `dump-processor`.

- Use `dump-processor -S [shards folder] -i [index folder] -M [score model] --cdfs [cdfs file]` to generate an index file from the shards, model and CDFs.

- Build and run `query-disk-index` and fill in its configuration file to serve the index.

- Build and run the frontend in `clipfront2`.
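The clustering step involves some arithmetic worth spelling out. You need to choose `n_clusters` so that a cluster fits in RAM, given that each vector is stored in two clusters, and then assign each vector to its two nearest centroids. This is a hypothetical sketch, not `kmeans.py` or `dump-processor`. The 1152-dimensional float16 embedding size, the 50% headroom factor and the function names are all assumptions. K-means clusters are not equally sized, so the largest cluster will be bigger than the average this computes.

```python
import heapq
import math


def clusters_needed(n_vectors, bytes_per_vector, ram_bytes, headroom=0.5, copies=2):
    """Smallest cluster count whose *average* cluster fits in headroom * ram_bytes.

    copies=2 because each vector is assigned to two clusters.
    """
    total_bytes = n_vectors * bytes_per_vector * copies
    budget = ram_bytes * headroom
    return math.ceil(total_bytes / budget)


def two_nearest(vec, centroids):
    """Indices of the two centroids closest to vec (squared Euclidean distance)."""
    def dist(i):
        return sum((a - b) ** 2 for a, b in zip(vec, centroids[i]))
    return heapq.nsmallest(2, range(len(centroids)), key=dist)


# Assumed: 1e8 vectors, 1152 dims stored as float16 (2 bytes each), 64 GiB of RAM.
n = clusters_needed(100_000_000, 1152 * 2, 64 * 2**30)
print(n)  # 14

print(two_nearest([0.0, 0.0], [[0.0, 1.0], [5.0, 5.0], [0.0, -2.0], [1.5, 0.0]]))  # [0, 3]
```

For unit-normalized embeddings, ranking centroids by squared Euclidean distance gives the same order as ranking by cosine similarity.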
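Using the same filtering settings at both points matters if record IDs are positions in the filtered stream. The sketch below assumes exactly that, which is an assumption about `dump-processor` rather than something documented here. Records too similar to any "reject" embedding are dropped, and the survivors are numbered sequentially. Changing the threshold between runs therefore shifts every later ID. The threshold values, record layout and function names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def filter_and_number(records, reject_embeddings, threshold):
    """Drop records whose embedding is at least threshold-similar to any reject
    embedding, then assign sequential IDs to the survivors.

    Returns a dict mapping new sequential ID -> original record name.
    """
    kept = [
        rec for rec in records
        if all(cosine(rec["embedding"], r) < threshold for r in reject_embeddings)
    ]
    return {i: rec["name"] for i, rec in enumerate(kept)}


# Hypothetical records; "spam" is close to the reject embedding [1, 0].
records = [
    {"name": "meme_a", "embedding": [0.0, 1.0]},
    {"name": "spam", "embedding": [1.0, 0.05]},
    {"name": "meme_b", "embedding": [0.3, 1.0]},
]
reject = [[1.0, 0.0]]
print(filter_and_number(records, reject, threshold=0.9))  # {0: 'meme_a', 1: 'meme_b'}
print(filter_and_number(records, reject, threshold=1.1))  # {0: 'meme_a', 1: 'spam', 2: 'meme_b'}
```

`meme_b` has ID 1 in the first run and ID 2 in the second, so an index built under one setting would point at the wrong records under the other.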
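Of the selection criteria mentioned for the `active_learning_*.py` scripts, high variance is the easiest to illustrate. If the quality model is an ensemble (as the name `ensemble_to_wide_model.py` suggests), the samples where ensemble members disagree most are likely the most informative to label next. The function name and data layout are hypothetical. This is a sketch of the idea, not the scripts' code.

```python
import statistics


def select_high_variance(predictions, k):
    """Return the k sample IDs whose ensemble predictions disagree most.

    predictions: dict mapping sample ID -> list of scores, one per ensemble member.
    """
    variances = {sid: statistics.pvariance(scores) for sid, scores in predictions.items()}
    return sorted(variances, key=variances.get, reverse=True)[:k]


# Hypothetical predictions from a three-member ensemble.
predictions = {
    "a": [0.5, 0.5, 0.5],  # members agree: little to learn
    "b": [0.1, 0.9, 0.5],  # strong disagreement
    "c": [0.4, 0.6, 0.5],
}
print(select_high_variance(predictions, k=2))  # ['b', 'c']
```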
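`compute_cdf.py` computes the distribution of quality scores. As a hypothetical illustration of what such a CDF is good for, this sketch builds an empirical CDF from a sample of scores. It then maps a raw score to its quantile, meaning the fraction of sampled memes scoring at or below it, and buckets that quantile. How `dump-processor` actually uses the CDFs for packing is not described here, so the bucketing function is an assumption.

```python
import bisect


def build_cdf(scores):
    """An empirical CDF can be represented by the sorted sample."""
    return sorted(scores)


def quantile(cdf, score):
    """Fraction of sampled scores less than or equal to score."""
    return bisect.bisect_right(cdf, score) / len(cdf)


def bucket(cdf, score, n_buckets):
    """Hypothetical: map a score to one of n_buckets roughly equal-population buckets."""
    return min(int(quantile(cdf, score) * n_buckets), n_buckets - 1)


cdf = build_cdf([0.9, 0.1, 0.4, 0.4])
print(quantile(cdf, 0.4))  # 0.75
print(bucket(cdf, 0.05, 4), bucket(cdf, 0.95, 4))  # 0 3
```

Working in quantiles rather than raw scores keeps thresholds meaningful even if the quality model is retrained and its raw output scale changes.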